[#29] AI Evolution: From AlexNet to Generative AI – Redefining the Paradigm of Software Development

Tracing the Journey of Neural Networks: Transformative Shifts from Deep Learning to the Dawn of Generative Intelligence

From AlexNet to Generative AI: Revolutionizing AI and Software Development

Introduction: The Dawn of a New Era in Computing

The Paradigm Shift in Software Development

The last decade has witnessed a seismic shift in computing. Traditional programming, with its rigid code and rule-based logic, has been upended by the rise of AI and machine learning. This transition from code-driven to data-driven development represents not just a technological evolution but a fundamental change in how we solve problems and create software.

Part 1: AlexNet – The Spark That Ignited the Deep Learning Fire

AlexNet: More Than a Winner

In 2012, the world of AI was forever changed by a neural network named AlexNet. Developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton for the ImageNet challenge, AlexNet didn't just win; it dominated, posting a top-5 error rate of 15.3% against 26.2% for the runner-up, a margin previously unthinkable. This victory was a clear signal: deep learning had arrived.

Rethinking Software Development

Before AlexNet, software development was largely a manual, rule-based process. Programmers wrote code for specific tasks, and machines followed these instructions. AlexNet brought a different paradigm into the mainstream: instead of writing the rules, you show a model examples of what you want, and it teaches itself to recognize the relevant patterns in the data and makes decisions based on them. This is a stark contrast to the traditional programming approach.
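To make the contrast concrete, here is a minimal sketch in Python. It is a toy, not AlexNet: a single artificial neuron (a perceptron) learns the logical AND function from four labeled examples, next to a hand-written rule that does the same job. The function names, the number of epochs, and the learning rate are all illustrative assumptions.

```python
# Traditional approach: the programmer writes the rule explicitly.
def rule_based_and(x1, x2):
    return 1 if (x1 == 1 and x2 == 1) else 0


# Data-driven approach: a single neuron is shown labeled examples and
# nudges its weights whenever its prediction disagrees with the label.
def train_perceptron(examples, epochs=20, lr=1.0):
    w1, w2, b = 0.0, 0.0, 0.0
    for _ in range(epochs):
        for (x1, x2), target in examples:
            pred = 1 if (w1 * x1 + w2 * x2 + b) > 0 else 0
            error = target - pred  # 0 when correct, +1 or -1 when wrong
            w1 += lr * error * x1
            w2 += lr * error * x2
            b += lr * error
    return w1, w2, b


def learned_and(params, x1, x2):
    w1, w2, b = params
    return 1 if (w1 * x1 + w2 * x2 + b) > 0 else 0


# The "program" is now the data: inputs paired with the desired outputs.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
params = train_perceptron(EXAMPLES)

for (x1, x2), _ in EXAMPLES:
    print((x1, x2), rule_based_and(x1, x2), learned_and(params, x1, x2))
```

Nobody wrote the condition `x1 == 1 and x2 == 1` into the learned version; it was recovered from the examples. Changing the labels in `EXAMPLES` changes the behavior without touching the code, which is the essence of the shift described above.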

The Legacy of AlexNet

Data-Driven Learning

AlexNet's success underscored the power of data in building software. The model was trained on roughly 1.2 million labeled ImageNet images, learning to identify the patterns and features that define various objects. It marked the beginning of an era in which data quantity and quality became crucial to software capabilities.

Some of the implications of the data-driven approach include:

Data Dependence: Neural networks' performance is directly tied to the data they are trained on. The quality and quantity of data become crucial, highlighting challenges in data collection and management.

Generalization vs. Explicit Instructions: Neural networks, unlike traditional software, learn to generalize from examples. This shift leads to innovative solutions but also introduces unpredictability and unexpected behaviors.

Testing and Validation: Because a network's behavior is learned rather than written down, it cannot be verified by reading the code alone. It has to be evaluated on data it did not see during training, which calls for comprehensive testing methodologies to ensure robustness and reliability.

Development Time: Training neural networks is resource-intensive. However, once trained, they can perform complex tasks efficiently, highlighting a trade-off between initial resource investment and long-term efficiency.
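The testing and validation point above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the data is synthetic, the "model" is just a learned threshold rather than a neural network, and the helper names (`train_test_split`, `fit_threshold`, `accuracy`) are hypothetical. The pattern it shows is the general one: hold some data back, fit on the rest, and measure performance only on the held-back portion.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and hold out a fraction for testing."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_threshold(train_rows):
    """Stand-in 'model': the midpoint between the two class means."""
    pos = [x for x, y in train_rows if y == 1]
    neg = [x for x, y in train_rows if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


def accuracy(threshold, rows):
    """Fraction of rows whose predicted label matches the true label."""
    correct = sum(1 for x, y in rows if (1 if x > threshold else 0) == y)
    return correct / len(rows)


# Synthetic data (an assumption for illustration): label is 1 when x > 50.
data = [(x, 1 if x > 50 else 0) for x in range(100)]

train_rows, test_rows = train_test_split(data)
model = fit_threshold(train_rows)          # fitted on training rows only
held_out_accuracy = accuracy(model, test_rows)  # scored on unseen rows only
print(f"accuracy on unseen data: {held_out_accuracy:.2f}")
```

The number that matters is the one measured on `test_rows`. Accuracy on the training rows says only that the model fits what it has already seen.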

GPU Acceleration and Its Impact

AlexNet also popularized the use of GPUs (Graphics Processing Units) for deep learning. The parallel processing capabilities of GPUs were pivotal in handling the massive computations required by neural networks, setting a new standard for AI research and development.

The implications were far-reaching:

It would change the way computing is done.

It would change the way software is written.

It would change the kinds of applications we are able to build.

Part 2: The Deep Learning Era – Complex Inputs, Simple Outputs

Deep Learning's Early Days

Post-AlexNet Innovations

The period following AlexNet saw a flurry of deep learning advancements. Architectures like GoogLeNet and VGG went deeper and significantly improved the handling of complex inputs, such as high-resolution images, leading to more accurate results. The outputs themselves remained comparatively simple, typically a class label or a score.

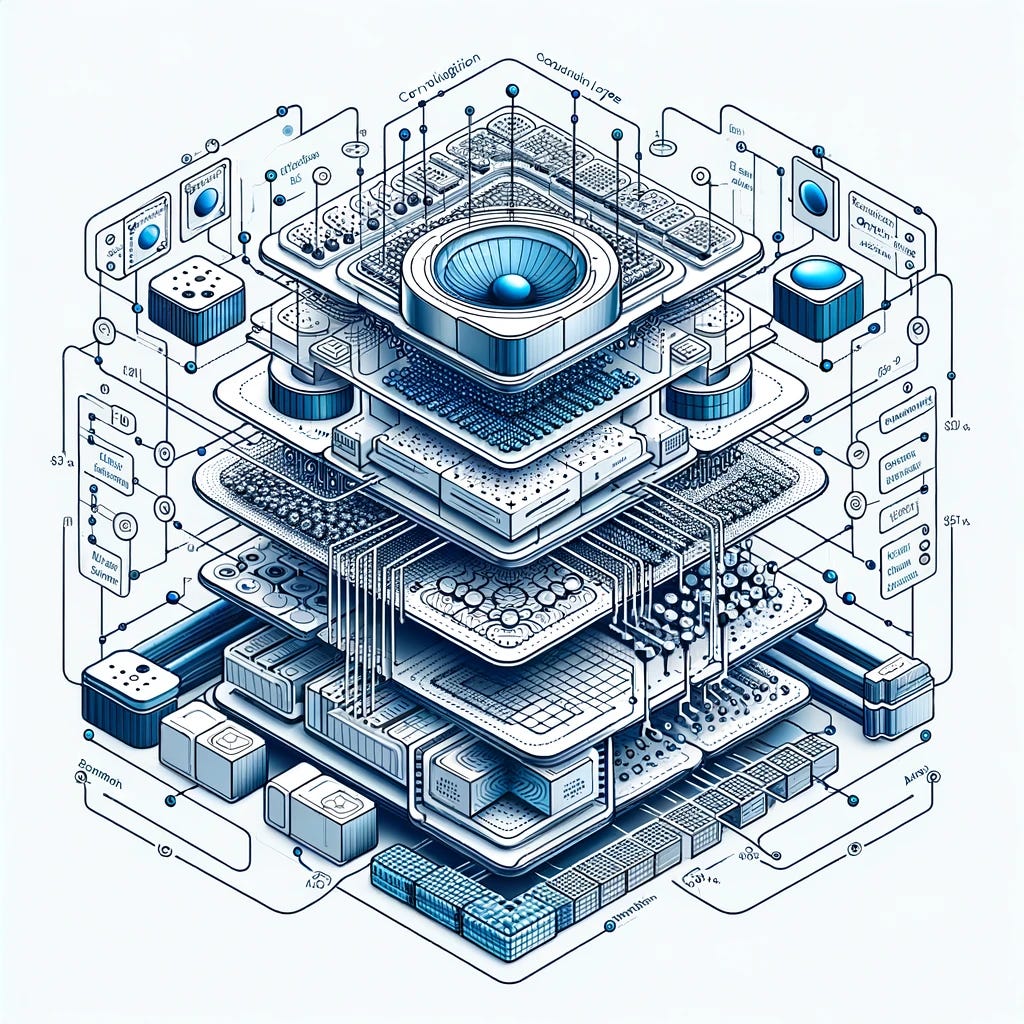

Evolution of Deep Learning: Stages, Characteristics, and Impact

The Limitations of Early Deep Learning Models

Specialization and Resource Intensity

While these models marked a leap forward, they were constrained by their need for specialization and high resource demand. Their development and training required substantial computational power and expert knowledge, making them less accessible.

Flexibility and Generalization

One of the critical limitations was the lack of flexibility. These early models were excellent for the tasks they were designed for but struggled to generalize their learning to different, broader applications.

Towards More Generalized AI

Recognizing these limitations set the stage for the next evolution in AI: a shift towards models capable of handling not just complex inputs but also delivering complex, nuanced outputs. This need led to the development of more versatile and adaptable AI solutions, culminating in the advent of transformers.

Part 3: The Transformer Revolution – Breaking the Mold

A New Era with Transformers

The Introduction of Transformers

In 2017, the introduction of transformers marked a significant turning point in the field of AI. Developed by researchers at Google, the transformer model was built entirely around a mechanism known as 'attention', which lets the model weigh every part of the input against every other part and process all positions in parallel, a drastic shift from the step-by-step sequential processing of previous recurrent architectures.

The Power of Attention Mechanisms

Because every position in a sequence can attend directly to every other position, the attention mechanism enabled transformers to handle long-range dependencies more efficiently, making them particularly effective for tasks involving sequential data, such as natural language processing (NLP).
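For readers who want to see the mechanism itself, the following is a minimal sketch of scaled dot-product attention in plain Python. It is a simplification under stated assumptions: real transformers first project the tokens through learned query, key, and value matrices and run several attention heads side by side, whereas this sketch uses the token vectors directly for all three roles. The token values are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of all value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# A toy "sequence" of three 2-dimensional token vectors (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: the same tokens act as queries, keys, and values.
outputs, weights = attention(tokens, tokens, tokens)

for i, row in enumerate(weights):
    print(f"token {i} attends with weights", [round(w, 3) for w in row])
```

Each token's output is computed from the whole sequence at once, and no token has to wait for the previous one to be processed. That independence is what makes the computation easy to parallelize and lets distant positions influence each other directly.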

Broadening the Horizons of AI Applications

Beyond NLP: A Versatile Tool

While initially designed for NLP, the transformer architecture proved to be incredibly versatile, finding applications in various fields beyond language, including computer vision, recommendation systems, and more.

Impact on AI Development

Transformers reduced the need for task-specific architectures, offering a more flexible and generalizable framework. This not only simplified the AI development process but also opened up new possibilities for cross-domain applications.

Part 4: Generative AI – The Frontier of Complexity

The Rise of Generative AI

From Transformers to Generative Prowess

Building on the transformer architecture, Generative AI represents the latest frontier in AI's evolution. It is primarily powered by Large Language Models (LLMs), which combine several key components: scaling laws, internet-scale data, self-supervised learning, and the transformer architecture itself.

The Components of Generative AI

Scaling Laws: Empirically, model performance improves in a predictable way as model size, training data, and compute are scaled up together. This observation has been crucial in guiding the development of more capable and sophisticated models.

Internet-Scale Data: The vast amounts of data available on the internet provide the diverse and extensive training material necessary for these models to learn a wide range of topics and styles.

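Self-Supervised Learning: This learning paradigm allows models to learn directly from the raw data without needing explicit annotations, for example by predicting the next word in a passage of text. Because the data supplies its own labels, the training process becomes more efficient and scalable.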

Transformer Architecture: The backbone of these models, enabling them to effectively process and generate complex inputs and outputs.
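The self-supervised learning component in the list above is easy to illustrate. The sketch below shows how raw text can supply its own training labels: every word becomes the target for the words that precede it. It is a simplified illustration, assuming whitespace tokenization and a small fixed context window; production LLMs use subword tokenizers and much longer contexts, and the function name `next_token_pairs` is hypothetical.

```python
def next_token_pairs(text, context_size=3):
    """Build (context, next_token) training pairs from unlabeled text."""
    tokens = text.split()  # simplification: split on whitespace
    pairs = []
    for i in range(1, len(tokens)):
        # Up to `context_size` preceding tokens form the input...
        context = tokens[max(0, i - context_size):i]
        # ...and the token that actually follows is the label.
        pairs.append((context, tokens[i]))
    return pairs


pairs = next_token_pairs("the cat sat on the mat")
for context, target in pairs:
    print(context, "->", target)
```

No human annotated anything here; the labels come for free from the text itself. That is why this approach scales to internet-sized corpora in a way that manually labeled datasets cannot.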

Mapping Complex Inputs to Complex Outputs

The Era of Advanced Generative Models

Generative AI has ushered in an era where the complexity of inputs and outputs processed by AI models has reached unprecedented levels. This advancement is epitomized by the ability of these models to not only interpret intricate data but also to generate complex and diverse outputs, ranging from realistic images to coherent and contextually rich text.

Beyond Traditional Boundaries

In earlier deep learning models, the focus was predominantly on processing complex inputs to produce relatively simple, often predictable outputs. Generative AI shatters this limitation. For instance, models like GPT-3 can take a simple prompt and generate a full-fledged, contextually appropriate article or engage in nuanced conversation, producing text that can appear to reflect human-like understanding.

Creative and Analytical Applications

The implications of this are vast and varied. In creative fields, AI is now producing artwork, music, and literature that many people find emotionally and aesthetically resonant. In more analytical realms, these models are being applied to intricate patterns in data, from financial market trends to potential new medical compounds, at a level of depth that was previously impractical.

Personalization and Adaptability

One of the most significant advancements is the ability of these models to personalize outputs. From AI-driven personalized learning tools that adapt to a student's learning style to customer service bots that provide tailored responses, the capacity of AI to understand and respond to specific needs is transforming user experiences.

Real-World Problem Solving

Generative AI is also making strides in addressing complex real-world problems. For example, in environmental science, AI models are used to simulate and predict the effects of climate change, taking into account an array of complex variables. In healthcare, AI is aiding in the creation of personalized treatment plans based on a patient’s unique genetic makeup and medical history.

Conclusion: Navigating the Business Horizon in the AI Revolution

As we witness the transformative journey from AlexNet to the sophisticated landscape of Generative AI, it's clear that the business world stands on the cusp of a new era. This evolution in AI isn't just a technical milestone; it heralds a paradigm shift in how businesses operate, innovate, and interact with customers.

The Strategic Imperative of Generative AI

The advent of Generative AI, with its ability to process and generate complex outputs, opens up unprecedented opportunities for businesses across sectors. From personalized marketing and customer experience enhancement to advanced predictive analytics in finance and supply chain optimization, AI is poised to redefine the competitive landscape.

Reshaping Business Models

In this new era, the agility to adapt and the vision to leverage AI strategically will become key differentiators for businesses. Companies that harness the creative and analytical power of Generative AI will not only streamline operations but also unlock new avenues for innovation and growth. The integration of AI into business strategies is no longer an option; it's a necessity for staying relevant.

I’ll be exploring these ideas in future blog posts.
